Highpoint RocketRAID 3510 SATA II RAID Controller

Jun 25th, 2009 | By Anthony

Date: 06/25/09 – 05:25:45 PM

Category: Storage

Page 1 : Introduction

Manufacturer: HighPoint Technologies, Inc.

Solid state drives are making an ever bigger presence in the world of hard drives. Over conventional mechanical drives, they offer some obvious benefits: speed, low latency and a lack of moving parts, to name a few. Although they are still expensive, laptop users and desktop performance seekers have taken a liking to them. With the current generation vastly improving upon the already fairly impressive previous generation, solid state drives are more than ever an attractive upgrade option. For a line of products in such a relative state of youth, they have done quite well.

For over a decade and a half, Highpoint has been developing and producing RAID solutions and, along with a small handful of companies, has become one of today's forerunners. Highpoint has enjoyed quite a bit of success with both enterprise level products and entry level home RAID solutions, and has built up an impressive track record with customers including Google, Sun Microsystems, Maxtor, Hitachi, SanDisk, HP, Lenovo, and the list goes on.

Page 2 : Features and Specifications

For a mid range priced card, the RocketRAID 3510 packs in the features. Built around the Intel IOP341 I/O processor and 256MB of DDR II ECC memory, on paper at least, the RocketRAID 3510 looks like it could be quite the contender.

As a more affordable member of the 3xxx family of RAID cards, the RocketRAID 3510 aims to bring high end RAID performance to the home user. Unfortunately, compared to its higher end counterparts, a few features have been removed. Most notably, the 3510 is a four channel SATA II card, not eight.

When we last looked at Highpoint's RocketRAID 2680 and 4320, we were quite fond of their RAID management software. Although the interface is perhaps not the most polished, Highpoint won some big points for functionality. The RocketRAID 3510 takes after the same design: BIOS setup, web interface, background/foreground initialization and so on.

Not to spend all of our time discussing features, let's jump right in and have a look at what Highpoint has sent us!

Page 3 : Package and Content

The RocketRAID 3510 ships in a typical cardboard box with ample room to spare for the packaging that keeps the card inside safe.

The boxes are plain and without the typical flashy graphics found on other hardware. I have to assume that Highpoint found it doubtful that fancy graphics and foil prints would sway one's purchasing decisions with RAID devices and I would agree.

On the front, we have a quick rundown of key features.

Towards the back we have a more in-depth listing of features and specifications. Of course, Highpoint's website provides more detail.

Inside, we have a manual, driver CD, a short back cover, one mini SAS to 4x SATA cable and most importantly the RAID card itself.

Let's start with the card.

The RocketRAID 3510 is a small, budget video card sized, single slot card. Physical size compatibility should not be an issue.

On the back we have a single mini SAS connector and an empty space where a second connector would sit on the RocketRAID 3520, the 3510's more expensive, eight channel sibling.

Being a basic model of the 3xxx family, the RocketRAID 3510 doesn't have anything fancy on the back like an Ethernet port, external SATA or SAS connector.

Page 4 : Installation

Installation comes in two parts: hardware and software. The greater portion of installation deals with software, but we'll begin with the physical installation.

Physical installation is no more than sliding the card into place and affixing it to the system chassis.

With the card in place, plug the data and power cables into the hard drives and we're set!

Page 5 : Software Setup

With the card installed, the RocketRAID 3510's initialization screen should precede the POST screen. Hit CTRL+H to enter the BIOS.

On the main screen, we have a listing of hard drives attached to the RAID card.

Navigate the menu with the arrow keys and press Enter to select.

The create menu gives a drop down box with various RAID configurations.

With an array level selected (in this case RAID 0), the next box presents various configuration options. With drives, block size, capacity and so on selected, hit create.
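For readers wondering what the block size option actually controls, here is a minimal sketch of how RAID 0 striping might map a logical offset onto the member drives. The function name, the 64 KB block size and the two drive layout are our own illustrative assumptions, not values read from the RocketRAID BIOS, and a real controller does this in firmware.

```python
def raid0_locate(offset_bytes, block_size, num_drives):
    """Map a logical byte offset to (drive index, byte offset on that drive).

    Hypothetical helper for illustration only.
    """
    stripe_block = offset_bytes // block_size   # index of the block within the array
    drive = stripe_block % num_drives           # blocks rotate across the drives
    row = stripe_block // num_drives            # stripe row on each drive
    drive_offset = row * block_size + offset_bytes % block_size
    return drive, drive_offset


# Two drives with 64 KB blocks (assumed values)
BLOCK = 64 * 1024
for logical in (0, BLOCK, 2 * BLOCK, 3 * BLOCK + 512):
    print(logical, raid0_locate(logical, BLOCK, 2))
```

Consecutive blocks alternate between the drives, which is why a large sequential transfer can keep both drives busy at once.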

And we are done! Next we will look at the web based setup.

Page 6 : Web Interface

Physical access to the system isn't always possible, especially in a larger work space, or if the system isn't even on site! Local or not, a web RAID management feature will surely come in handy.

Use the default username: RAID and default password: hpt to log into the system.

As we just created a RAID 0 array through the BIOS, it is shown in the first section. Otherwise, hit Create Array to proceed with setup.

The same settings we dealt with earlier, only on a nicer looking interface!

With an existing array, the Maintenance button allows the user to remove, expand and manage the array without disrupting service.

Through the Manage menu, individual disk settings can be set.

The menu to the right of that is an event log.

Lastly, for hard drive health and status, click SHI or Storage Health Inspector.

Page 7 : Testing Setup

Since we last looked at RAID cards, our methodology has evolved quite a bit with the addition of a few new tests along with revamping old ones. First we will begin with system specifications.

We will be using four pieces of software today: Iometer, SiSoftware Sandra, Intel's IPEAK Storage Performance Toolkit and Intel's NAS Performance Toolkit.

The bulk of our testing will be centered on Iometer. We will look at sequential and random throughput as well as I/O performance.

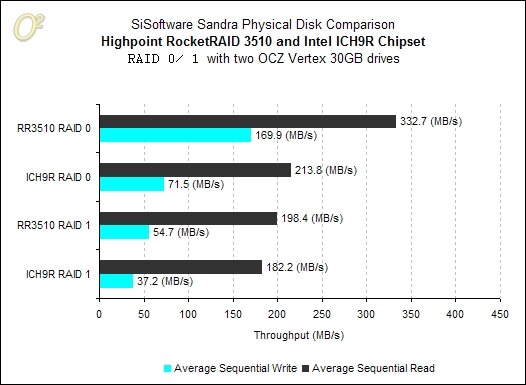

The second piece of software we will be using today is SiSoftware Sandra's Physical Disk benchmark to look at sequential read and write across the disks.

For real world testing, or what a user could reasonably expect to experience, we will be repurposing Intel's NAS Performance Toolkit. Intel's NASPT offers a number of strengths, first and foremost consistency. With NASPT's built in traces, test results are easily reproduced across systems and give insight into how a system would perform under real world conditions. Unlike with our more elementary hardware level tests, we are not interested in isolating the software environment here. Day to day storage usage is heavily dependent on system software, the operating system and so on. With the trace files, we can simulate specified hard drive activity down to the distribution of random and sequential reads/writes, the spread of data over individual platters (locality), and a number of other access characteristics.

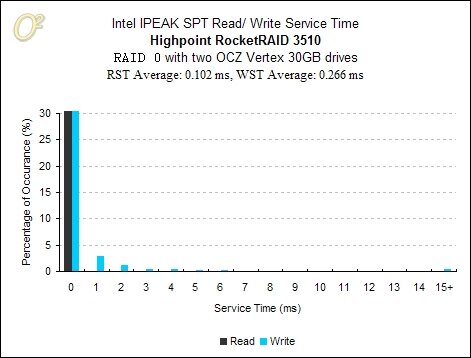

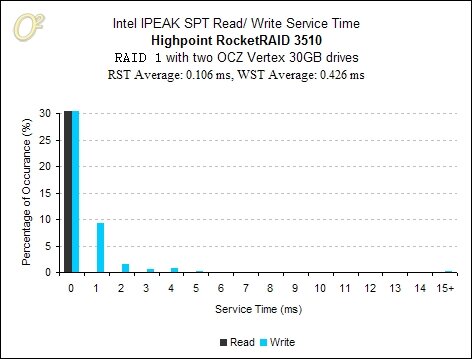

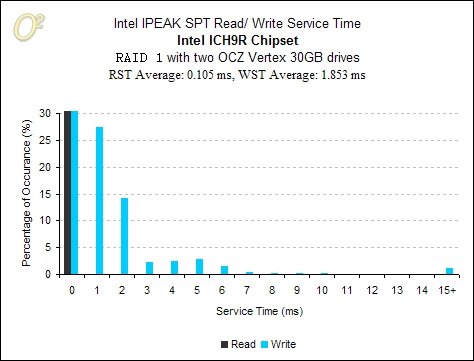

The last piece of software we will deal with today is Intel's IPEAK Storage Performance Toolkit.

Using a battery of both user level and system level tests we can better and more accurately gauge performance and get a better idea of what users can expect. Our tests will include two platforms and two RAID configurations. The RocketRAID 3510 will run head to head with the ICH9R chipset on a DFI X38 motherboard in both RAID 0 and RAID 1 configurations.

Page 8 : Iometer I/O Performance

Conventional hard drive testing methods, which simply yield an average throughput value, make it difficult to get an accurate idea of a system's performance. For our first set of tests we will be looking at IO performance, or the number of requests completed by the array per second.

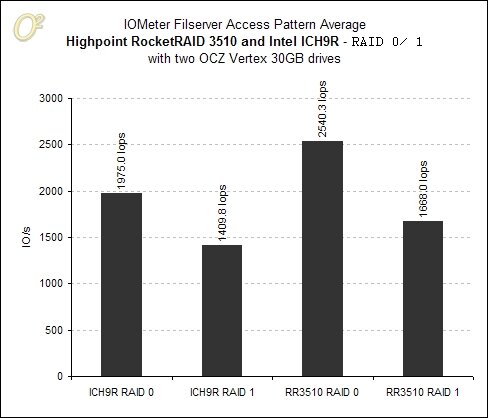

With Intel's defined File Server access pattern, we can simulate a typical file server environment and specify the distribution of file sizes, the percentage of reads and writes, and the degree of randomness.
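To make the idea of an access pattern more concrete, here is a rough sketch of how such a specification could be turned into a stream of requests. Every number in it is a placeholder we made up for illustration; it is not Intel's actual File Server specification, and `generate_requests` is a hypothetical helper rather than anything Iometer exposes.

```python
import random

# Hypothetical access specification: (transfer size in bytes, share of requests).
# Placeholder values for illustration, not Intel's File Server pattern.
SIZE_MIX = [(4096, 0.6), (16384, 0.2), (65536, 0.2)]
READ_FRACTION = 0.8     # assumed share of requests that are reads
RANDOM_FRACTION = 1.0   # assumed share of requests that are non-sequential


def generate_requests(count, seed=0):
    """Yield (operation, size, is_random) tuples following the mix above."""
    rng = random.Random(seed)
    sizes = [size for size, _ in SIZE_MIX]
    weights = [weight for _, weight in SIZE_MIX]
    for _ in range(count):
        op = "read" if rng.random() < READ_FRACTION else "write"
        size = rng.choices(sizes, weights=weights)[0]
        is_random = rng.random() < RANDOM_FRACTION
        yield op, size, is_random


for request in generate_requests(5):
    print(request)
```

A benchmark then issues these requests against the array at a given queue depth and counts how many complete per second.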

With a queue depth, or overall load, ranging from 1 to 256, we have a pretty clear lineup: the RocketRAID 3510 in RAID 0 placed first, followed by Intel's ICH9R chipset in RAID 0. Of the two RAID 1 setups, the RocketRAID 3510 came out ahead, placing third overall and trailing ever so slightly behind the ICH9R chipset in its RAID 0 configuration.

With mechanical drives, IO performance tends to increase as the number of outstanding IOs increases. This is due to the hard drive queuing operations while it performs others. With solid state drives, however, latency for both reading and writing is often incredibly low: where a typical mechanical drive has a read access time of around 8ms to 9ms, solid state drives usually come in near a tenth of a millisecond. Such drives therefore tend to maintain a fairly steady level of IO performance, and the effect of queue depth is usually negligible.
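As a back of the envelope illustration of why access time matters so much, a drive handling one request at a time cannot complete more requests per second than its access time allows. The sketch below uses the latency figures quoted above (8.5ms is simply the midpoint of the 8ms to 9ms range) and deliberately ignores queuing, reordering and transfer time, so treat the results as rough ceilings rather than measurements.

```python
def iops_ceiling(access_time_ms):
    """Upper bound on requests per second when requests are served one at a time."""
    return 1000.0 / access_time_ms


print(round(iops_ceiling(8.5)))   # mechanical drive: roughly 118 requests per second
print(round(iops_ceiling(0.1)))   # SSD near a tenth of a millisecond: roughly 10000
```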

Page 9 : IPEAK SPT CPU Load

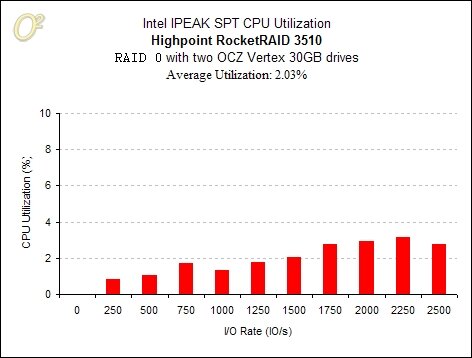

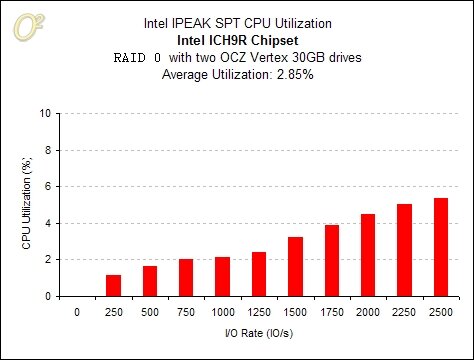

One of the biggest benefits of hardware based RAID solutions is that the cards have their own processors. By offloading work the system's CPU would otherwise have to do onto a dedicated I/O processor, the system frees up some CPU cycles. While the difference would probably be more significant with higher level RAID configurations such as RAID 5/6, our tests still recorded some differences in system CPU load with RAID 0 and 1.

In RAID 0, the system with the RocketRAID 3510 kept CPU utilization at around 2%, while with the chipset CPU utilization increased with the system I/O rate. Given that our X axis tops out at 2500 IOPS, it wouldn't be unreasonable to roughly extrapolate that the trend of increasing CPU utilization would continue beyond it.

With RAID 1 things are a bit different. The RocketRAID 3510 kept CPU utilization hovering around 3%, with an average of about 2.3%. With the ICH9R chipset, half of the test remained under 2%, while any I/O rate above 1500 resulted in a heavier CPU utilization of 4%, for an average of about 2.9%.
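To give a feel for the kind of work that gets offloaded at the higher RAID levels, here is a minimal sketch of the XOR parity calculation that RAID 5 is built on. This is the general concept only; it says nothing about how the Intel IOP341 or Highpoint's firmware actually implement it, and the data bytes are arbitrary examples.

```python
from functools import reduce


def xor_parity(blocks):
    """Return the byte-wise XOR of equally sized data blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Three arbitrary data blocks, as if striped across three drives
data = [b"\x0f\xf0\xaa", b"\x33\x55\x01", b"\xff\x00\x10"]
parity = xor_parity(data)

# If one block is lost, XOR of the surviving blocks and the parity rebuilds it
rebuilt = xor_parity([data[0], data[2], parity])
assert rebuilt == data[1]
```

Every write to such an array means recalculating parity, which is exactly the sort of repetitive work a dedicated I/O processor can take off the host CPU.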

Page 10 : Iometer Sequential and Random Throughput

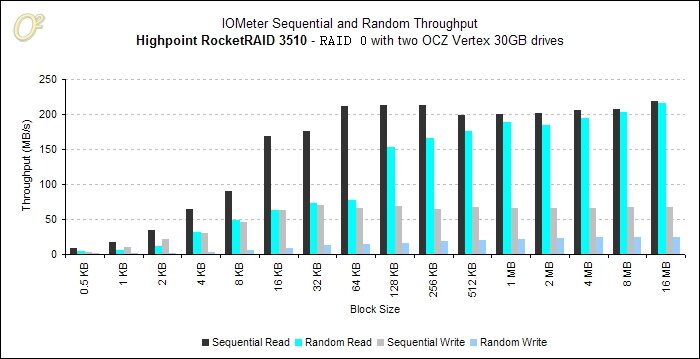

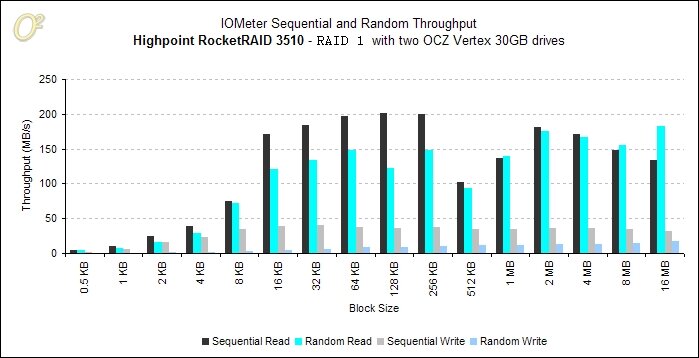

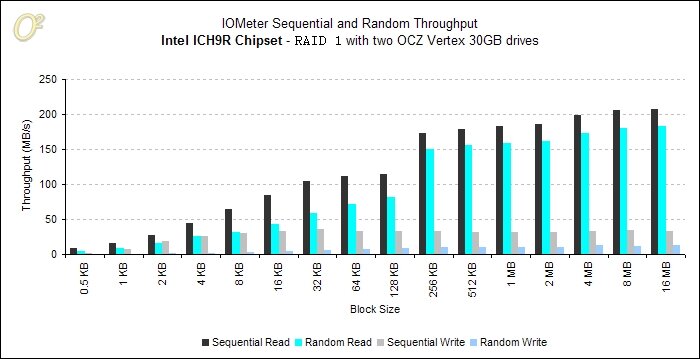

Our next measure of performance comes, again, from Iometer. This time we will be looking at throughput: reads and writes with both sequential and random access across different block sizes.

Throughput is measured in two ways, sequential and random. On a conventional mechanical hard drive, reading and writing sequential data is of course much faster than random access, due to the time it takes for the head on the actuator arm to position itself before retrieving or writing data. Still, even with SSDs the system has to pull bits of data from all around the drive.

Where I/O performance takes the front seat in applications that need a high number of transactions, raw throughput accounts for moving, writing and reading files on the disk.

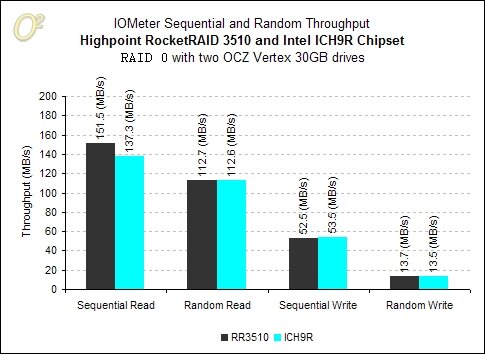

I'll be the first to admit, graphs comparing throughput across different block sizes get messy. Although they do convey some important information, such as the distribution of throughput between lower and higher range block sizes, looking at averages gives a much better picture for comparisons.

In RAID 0, our average figures are roughly the same between both platforms except for sequential read which averaged out to 150 MB/s.

Our RAID 1 configuration showed the RocketRAID 3510 clearly taking the lead in each test.

In its reporting, Iometer is focused more on multi-user environments, and the figures it generates reflect that.

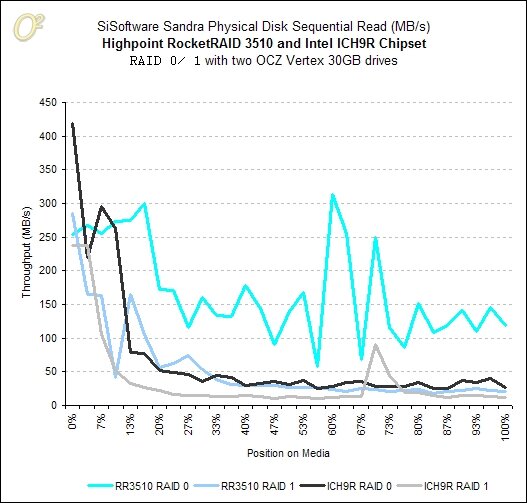

Page 11 : SiSoftware Sandra Throughput

The strength of Iometer is its ability to simulate multi-user and network workloads. However, because it does not simulate localized hard drive activity, its reported values are typically lower than they would be in a single user environment. On the other hand, more popular benchmarking software such as HD Tach, ATTO, SiSoft Sandra, HD Tune and so on is designed to simulate a single user environment.

With SiSoftware Sandra, the difference in performance with a hardware RAID based solution is more apparent. Our first graph looks at read throughput. In first place we have the RocketRAID 3510 in RAID 0 and in last place the ICH9R in RAID 1, with the ICH9R in RAID 0 and the RocketRAID 3510 in RAID 1 roughly tied in between.

Looking at write performance we have a similar placement, again with the RocketRAID 3510 in RAID 0 placing first.

Looking at our averages graph, the RocketRAID 3510 in RAID 0 performed spectacularly.

Page 12 : IPEAK SPT Read/ Write Service Time

With mechanical drives, access latency occurs predominantly in the stages where the drive spins the platter into position and the actuator arm swings the head into place to either read or write data. Given that SSDs have no moving parts, access latency tends to be a lot lower. Where mechanical drives typically have read access latency in the 8ms to 9ms range, it isn't uncommon for SSDs to have read times in the range of a tenth of a millisecond.

While there were no significant differences in read times, with write service times the results were quite a different story. On the ICH9R chipset our write service time average was 0.75ms whereas the RocketRAID 3510 averaged 0.27ms.

Our RST (read service time) average in RAID 1 between the two was about the same, but again with WST the RocketRAID 3510 was the clear winner with an average just above 0.4ms. The ICH9R chipset averaged 1.8ms.
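To put the write service times in proportion, the short calculation below divides the chipset's average by the RocketRAID's. The figures are the averages quoted above; we are assuming the first pair belongs to the RAID 0 run, since the RAID 1 results are called out separately, and the RAID 1 RocketRAID figure is rounded down to 0.4ms from "just above 0.4ms", so the real ratio there is slightly lower.

```python
# Average write service times in milliseconds, as quoted in the text.
# Labelling the first pair "RAID 0" is our assumption; 0.4 is a rounded figure.
write_service_ms = {
    "RAID 0": {"ICH9R": 0.75, "RocketRAID 3510": 0.27},
    "RAID 1": {"ICH9R": 1.8, "RocketRAID 3510": 0.4},
}


def slowdown(times):
    """How many times longer the chipset takes per write than the RAID card."""
    return times["ICH9R"] / times["RocketRAID 3510"]


for level, times in write_service_ms.items():
    print(f"{level}: ICH9R takes about {slowdown(times):.1f}x as long per write")
```

That works out to roughly 2.8x in the first comparison and about 4.5x in RAID 1.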

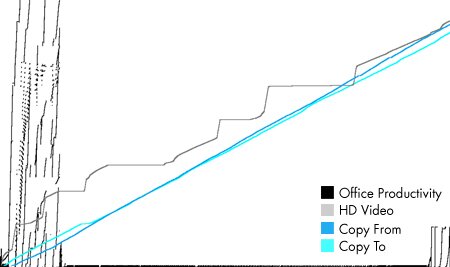

Page 13 : NASPT HD Video Playback and Office Productivity

For our last set of tests, we will revisit throughput this time with Intel's NAS Performance Toolkit. The two benchmarks we will look at are HD Video playback and Office productivity. Playing back a video involves sequential access while Office Productivity involves random access with a combination of reading and writing.

For clarity, the above image visually represents disk access, where the vertical axis represents location on the disk and the horizontal axis represents the total duration of the test. For comparison, straight copying to or from a disk is represented by the two blue lines. The duration of the test is determined by the speed at which each configuration completes the assigned task and thus correlates with throughput.

Starting with HD Video, both RAID configurations with the ICH9R chipset averaged out to about the same throughput rate. The RocketRAID 3510 on the other hand clearly took the lead here by a wide margin.

With random access and a combination of both reading and writing thrown into the picture all configurations except the RocketRAID 3510 in RAID 0 achieved similar throughput rates.

Comparing between the ICH9R chipset and the RocketRAID 3510, there are clear performance advantages with a hardware based RAID solution. Consistently, the RocketRAID 3510 placed first.

Page 14 : Conclusion

With today's review, we had two goals in mind: first, of course, to evaluate the RocketRAID 3510, and second, to provide some insight into the benefits of hardware RAID with regard to solid state drives. First let's talk about the card itself.

The RocketRAID 3510 is amazingly fast. For a mid range priced card it provides excellent performance, and although we only had two SSDs to test with, the card showed some excellent capabilities. In terms of features, the RocketRAID 3510 had all the goods we enjoyed with the RocketRAID 2680 and 4320 cards: an excellent web interface, an easy to navigate system BIOS and an easy setup.

In terms of SSD performance, the RocketRAID 3510 absolutely boosts performance. Given the couple hundred megabytes per second that can be crammed through an SSD, it is reasonable to suggest that some hardware support would help make the most of that performance. Consistently through our benchmarks we found the RocketRAID 3510 taking the lead, and at times by rather big margins.

In terms of cost, however, the RocketRAID 3510 is priced in the mid $300 range, so it definitely isn't a budget card. Our review today dealt specifically with the benefits of hardware RAID for solid state drives; we can't say for certain that such performance gains would carry over proportionally to mechanical drives, but that wasn't the intent here. Solid state drives on their own are expensive, and per gigabyte they are among the least cost effective solutions available, but the purpose of these devices isn't storage capacity but speed. In terms of feasibility, for a typical high end system, a gaming setup or simply booting an operating system faster, solid state drives on their own are perhaps quite sufficient, if for no other reason than price. But for a more data intensive application, like a centralized file server or a system which deals with stacks of digital content on a daily basis, a RAID setup has some clear benefits. Of course, storage capacity isn't particularly a strength in this case, so such a system would have to be one that needs to move files at a jaw dropping rate.

All in all, hardware RAID performance with solid state drives improves upon software RAID. Expensive? Yes, but we are an overclocking website, and we do love performance.

Advantages

- Excellent performance

- Flexible software suite

Disadvantages

- Price

Overclockers Online would like to thank HighPoint for making this review possible.